SelphID SDK Technical Specifications

1. Introduction

This document outlines the technical requirements for incorporating the FacePhi SelphID SDK into your systems.

It also describes the components provided and the steps required to install the SDK on Windows and Linux operating systems.

2. Technical Specifications

The following sections show the minimum and recommended hardware and software requirements for installing the SDK on the server side, as well as the technical specifications of the built-in facial recognition algorithm.

2.1 Hardware requirements (SDK)

The following tables show the minimum and recommended hardware requirements for installing the SelphID SDK on different operating systems:

2.1.1 Hardware requirements

Hardware requirements without passive liveness for SelphID SDK SSE4 and FMA3:

| Minimum requirement | Recommended requirement |
|---|---|
| 6th to 11th generation Intel Core or Intel Xeon 2.5 GHz processors with SSE4.2 technology | 2 CPU Intel Xeon E5 3.5 GHz with FMA3 technology |
| 4 GB RAM | 16 GB RAM |
| 2 GB disk | 10 GB disk |

Hardware requirements with passive liveness for SelphID SDK SSE4 and FMA3:

| Minimum requirement | Recommended requirement |
|---|---|
| 6th to 11th generation Intel Core or Intel Xeon processors with SSE4.2 and AVX2 technology | 16 CPU Intel Xeon E5 3.5 GHz with SSE4.2, FMA3 and AVX2 technology |
| 8 GB RAM | 32 GB RAM |
| 20 GB disk | 50 GB disk |

IMPORTANT:

When integrating the passive liveness functionality of the SelphID SDK, the images used must have a minimum resolution of 720p (HD) for the results to match the metrics shown in the Passive Liveness Detection section of this document.

2.2 Software requirements

The following tables show the minimum software requirements for installing the SelphID SDK on different operating systems:

2.2.1 Software requirements SelphID SDK SSE4 and FMA3 (Windows x64)

| Minimum software requirements |
|---|
| Windows 10 / Windows Server 2016 |
| Visual C++ 2017 x64 |
| Java 1.8 (Oracle JDK recommended) |
| .NET Framework 4.0 |

2.2.2 Software requirements SelphID SDK SSE4 and FMA3 (Linux Ubuntu)

| Minimum software requirements |
|---|
| Ubuntu 18.04 or compatible |
| Glib 2.26, libcurl 7.58.0 |
| GCC 7.3.0 (C++ SDK version only) |
| Java 1.8 (Oracle JDK recommended) |

2.3 Reliability statistics of the facial recognition algorithm

The following tests evaluate the reliability of SelphID SDK 6.13.0. Their aim is to understand the operation and behaviour of the SDK by obtaining objective measures of the reliability and accuracy of its face recognition software. To this end, experiments were carried out on a widely used public database: the NIST Face Recognition Grand Challenge (FRGC) [2].

The experiments report results for two modes of operation, verification and identification:

- Verification (1:1): 'Are you who you say you are?': Two biometric samples (facial templates) are compared, and if the resulting score is above a threshold, the system has positively verified that you are who you say you are. These systems are known as 1:1 because the facial pattern being evaluated is compared only with your own facial template, producing a 'yes' or 'no' response.

- Identification (1:N): 'Who are you?': In this operational mode, the biometric sample is compared with a list of patterns stored in a database. It is also known as 1:N because the identity must be correctly located among N stored patterns with different identities against which the sample is compared.

Two sets of images are used to evaluate these modes of operation: a Test set (Query set) that simulates unknown users trying to access the system, and a Registration set (Target set) of previously enrolled legitimate users against which the queries are compared.
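The difference between the two modes can be illustrated with a minimal sketch. It assumes, purely for illustration, that a template is a plain vector of floats compared with cosine similarity; real SelphID templates are opaque binary blobs scored by the SDK's own matcher, and `verify`, `identify` and the threshold values are hypothetical names, not the SDK API.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical template model: a plain float vector. Real SelphID templates
# are opaque binary blobs scored by the SDK's own matcher.
Template = Sequence[float]


def cosine_similarity(a: Template, b: Template) -> float:
    """Similarity score in [-1, 1]; higher means more alike."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def verify(probe: Template, enrolled: Template, threshold: float) -> bool:
    """1:1 verification: compare against one claimed identity, yes/no answer."""
    return cosine_similarity(probe, enrolled) > threshold


def identify(probe: Template, gallery: Dict[str, Template],
             threshold: float, rank: int = 1) -> List[str]:
    """1:N identification: rank every enrolled identity by score and keep the
    top `rank` candidates whose score is above the threshold."""
    scored = sorted(((cosine_similarity(probe, t), uid) for uid, t in gallery.items()),
                    reverse=True)
    return [uid for score, uid in scored[:rank] if score > threshold]


gallery = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
print(verify([0.9, 0.1], gallery["alice"], threshold=0.8))  # True
print(identify([0.9, 0.1], gallery, threshold=0.8))         # ['alice']
print(identify([0.7, 0.7], gallery, threshold=0.8))         # []
```

In 1:1 mode a single score is computed; in 1:N mode the cost grows with the gallery size, which is why the identification experiment below reports hundreds of millions of comparisons.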

The results obtained in these experiments follow the standard measures defined in the international standard ISO/IEC 19795-1 [3].

2.3.1 Verification 1:1

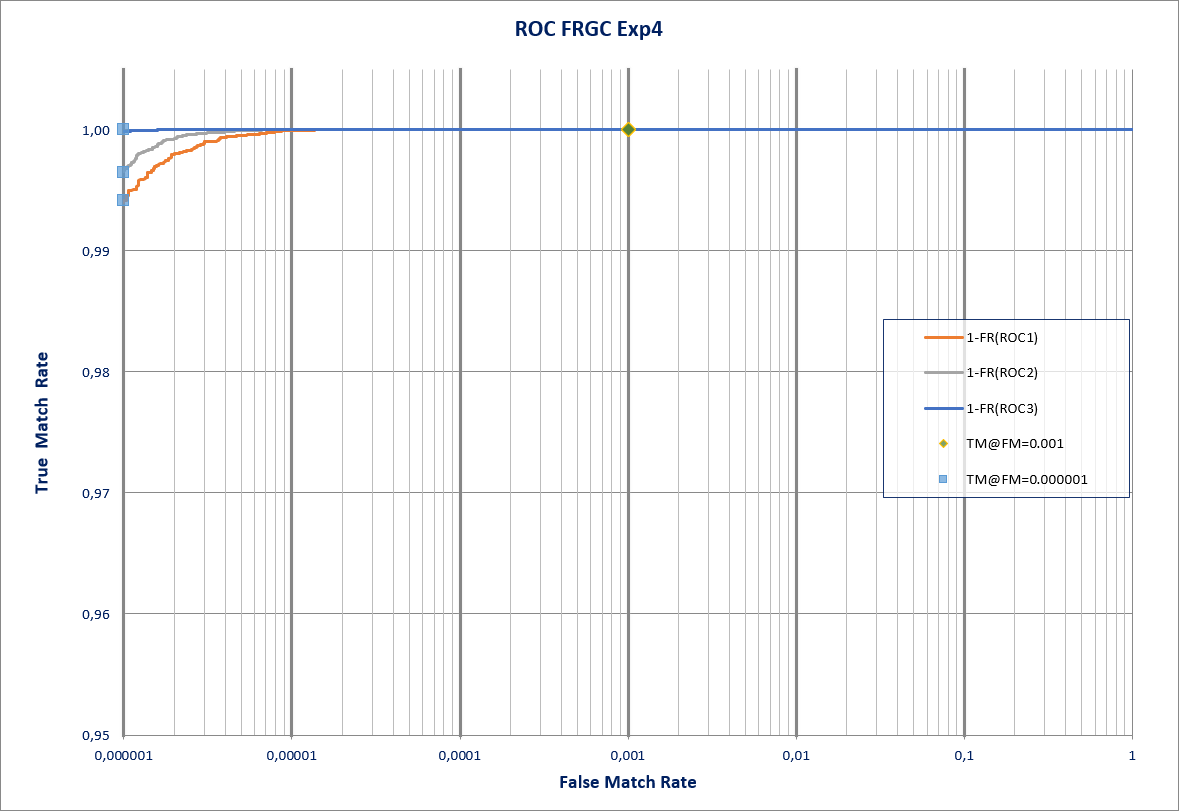

2.3.1.1 Uncontrolled Conditions (FRGC Exp 4)

This experiment measures the robustness of the technology against images captured in uncontrolled conditions. The image sets are extracted from the FRGC v2.0 database [2]: a 'target set' of images captured in controlled conditions and a 'query set' of images captured in uncontrolled conditions. They are the same sets defined for Experiment 4 of that database [7]:

- The FRGC Experiment 4 'Target Set' is made up of 16,028 images belonging to 466 users.

- The 'Query Set' is a test set containing a total of 8,014 images.

- The total number of comparisons made exceeds 128 million.
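The comparison count follows from scoring every query image against every target image:

$$
N_{\text{comparisons}} = |\text{Target}| \times |\text{Query}| = 16{,}028 \times 8{,}014 = 128{,}448{,}392 \approx 1.28 \times 10^{8}
$$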

The following table shows the most important aspects of the experiment, as well as the True Match Rate (TMR) at a False Match Rate (FMR) of 0.1% [3]:

| Verification under uncontrolled conditions (128M comparisons) | TMR at FMR = 0.1% |
|---|---|
| ROC I | 99.9999% |
| ROC II | 99.9999% |
| ROC III | 99.9999% |

Table 1. Results of the experiment using images under uncontrolled conditions.

The following chart shows the results obtained in the experiment as ROC (Receiver Operating Characteristic) curves [3]. Three ROC curves¹ assess the performance of the algorithm with target and query images captured over increasing time spans. In addition to the TMR at FMR = 0.1%, each ROC curve includes markers highlighting the operating points for high-security applications².
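Reading a TMR off a ROC curve at a fixed FMR amounts to choosing the threshold from the impostor score distribution and then measuring the fraction of genuine scores above it. The sketch below assumes two hypothetical lists of similarity scores (genuine and impostor comparisons) and strict "score above threshold" acceptance; it illustrates the general computation and is not FacePhi's evaluation code.

```python
import math
from typing import Sequence


def threshold_at_fmr(impostor: Sequence[float], target_fmr: float) -> float:
    """Threshold such that at most target_fmr of the impostor scores lie
    strictly above it."""
    ranked = sorted(impostor, reverse=True)
    # Number of false matches we may tolerate; the tiny epsilon guards against
    # floating-point products such as 1e-6 * 1e6 landing just below 1.
    allowed = math.floor(target_fmr * len(ranked) + 1e-9)
    if allowed >= len(ranked):
        return -math.inf  # every impostor comparison may be accepted
    # Only the `allowed` highest impostor scores can lie strictly above this value.
    return ranked[allowed]


def rate_above(scores: Sequence[float], threshold: float) -> float:
    """Fraction of scores strictly above the threshold."""
    return sum(1 for s in scores if s > threshold) / len(scores)


def tmr_at_fmr(genuine: Sequence[float], impostor: Sequence[float],
               target_fmr: float) -> float:
    """True Match Rate obtained at the threshold fixed by the target FMR."""
    return rate_above(genuine, threshold_at_fmr(impostor, target_fmr))


impostor = [i / 100 for i in range(100)]    # 100 impostor scores: 0.00 .. 0.99
genuine = [0.5, 0.97, 0.985, 0.99, 1.0]     # 5 genuine scores
print(threshold_at_fmr(impostor, 0.01))     # 0.98
print(rate_above(impostor, 0.98))           # 0.01 (achieved FMR)
print(tmr_at_fmr(genuine, impostor, 0.01))  # 0.6
```

For the Table 1 setting, `target_fmr` would be 0.001 (FMR = 0.1%). A high-security marker at FMR = 0.000001 corresponds to `target_fmr = 1e-6`, which with one million impostor comparisons tolerates a single false match.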

2.3.2 Identification 1:N

2.3.2.1 Search for Subjects in List

This experiment evaluates the reliability of the SDK in a Closed Set Identification³ application. For this purpose, Experiment 1 of the FRGC 2.0 database [2] is used. In this case, a single image is used to create each biometric pattern. The Test and Registration sets contain 16,028 samples each (257 million comparisons).

For each sample in the test set, the system is asked to return the L biometric samples of the registration set with the greatest similarity. Two conditions must be met for the system to correctly identify the user:

- The correct identity must be within the top R positions (Rank) of the Candidate List L.

- The similarity must be greater than the security threshold T (Threshold). These thresholds correspond to the Security Thresholds provided by FacePhi.

To make the test more demanding, the Rank has been reduced to the minimum (R = 1) and the largest possible list has been used, i.e. L = 16,028 (each search covers the whole database). In summary, to be considered a hit, the person must be ranked first in a Candidate List drawn from the entire database; this is more widely known as the Rank-1 Hit Rate⁴.

Reliability measures are based on obtaining the True Positive Identification Rate (TPIR) [3] for different levels of security set by the threshold.

| Identity search for a given list | | |
|---|---|---|
| Size of the biometric template | 1322 bytes | |
| TPIR (R=1), Rank 1 | NIST FRGC Exp 1 | 98.7272% |

Table 2. Rank 1 of the identification experiment.
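The Rank-1 hit criterion described above (correct identity in first place and similarity above T) can be written as a short computation. The input format, a hypothetical list of probes with their true identity and scored candidate lists, is an assumption for illustration and not the SDK's output format.

```python
from typing import List, Sequence, Tuple

# Hypothetical search result: (true identity of the probe, [(candidate id, score), ...])
Search = Tuple[str, Sequence[Tuple[str, float]]]


def tpir_rank1(searches: List[Search], threshold: float) -> float:
    """True Positive Identification Rate at rank R = 1: a probe counts as a hit
    only if the top-scoring candidate is the correct identity AND its score is
    above the threshold T."""
    hits = 0
    for true_id, candidates in searches:
        if not candidates:
            continue  # empty candidate list counts as a miss
        best_id, best_score = max(candidates, key=lambda c: c[1])
        if best_id == true_id and best_score > threshold:
            hits += 1
    return hits / len(searches)


searches = [
    ("a", [("a", 0.9), ("b", 0.4)]),  # hit
    ("b", [("a", 0.8), ("b", 0.7)]),  # miss: wrong identity ranked first
    ("c", [("c", 0.3), ("a", 0.2)]),  # miss: correct identity but below threshold
]
print(tpir_rank1(searches, threshold=0.5))  # 0.3333333333333333
```

Raising T trades hits for security: the third probe becomes a hit if the threshold is lowered below 0.3, which is why TPIR is reported per security level.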

2.4 Performance statistics

The following figures show performance statistics for the SelphID SDK, measured as processing times for these modules:

- Passive liveness.

- Extraction of biometric templates.

- Facial matching (1:1) with templates and images.

- Facial matching (1:N) with templates.

To carry out the measurements of liveness, extraction, 1:1 matching and 1:N matching, a device with the following characteristics was used:

- Intel(R) Xeon(R) Platinum 8275CL CPU @ 3.00 GHz

- 16-core processor

- 32 GB RAM

- Linux Ubuntu 18.04.6 LTS

Figures 1 and 2 show the data obtained for SSE4 and FMA3 processors, respectively.

| | Biometric extraction (640x480 image) | Matching (1:1) with raw templates | Matching (1:1) with images (640x480) |
|---|---|---|---|
| Performance | 96 ms | 181 ms | 195 ms |

Figure 1. Data obtained for SSE4 processors on SelphID SDK Linux.

Notes:

- On a Windows server the data obtained may vary by ±10%.

| | Biometric extraction (640x480 image) | Matching (1:1) with raw templates | Matching (1:1) with images (640x480) |
|---|---|---|---|
| Performance | 96 ms | 181 ms | 195 ms |

Figure 2. Data obtained for FMA3 processors on SelphID SDK Linux.

Notes:

- On a Windows server the data obtained may vary by ±10%.

Performance statistics for liveness are shown below:

| Passive liveness (CPU) | |
|---|---|
| 640x480 image | 233 ms |
| 1280x720 image | 266 ms |

Figure 3. Data obtained with SelphID SDK Linux version.

Performance data for gallery creation and 1:N identification are shown below. Figures 4 and 5 show the data obtained for SSE4 and FMA3 processors, respectively.

| | Create gallery | Identification |
|---|---|---|
| Templates/second | 72,000 | 6,500,000 |

Figure 4. Data obtained for SSE4 processors on SelphID SDK Linux.

Note: on a Windows server the data obtained may vary by ±10%.

| | Create gallery | Identification |
|---|---|---|
| Templates/second | 72,000 | 6,500,000 |

Figure 5. Data obtained for FMA3 processors on SelphID SDK Linux.

Note: on a Windows server the data obtained may vary by ±10%.
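As a rough capacity estimate, the gallery throughput figures can be turned into expected times for a given gallery size. This sketch assumes that time scales linearly with the number of templates on the reference machine and treats the ±10% Windows variation as an optional margin; both are simplifications, not guarantees.

```python
# Throughput from the gallery tables (SSE4 and FMA3 report the same values).
CREATE_GALLERY_TPS = 72_000      # templates/second added to a gallery
IDENTIFICATION_TPS = 6_500_000   # templates/second compared during a 1:N search


def estimate_seconds(gallery_size: int, templates_per_second: float,
                     margin: float = 0.0) -> float:
    """Linear time estimate; `margin` (e.g. 0.10 for Windows) inflates the
    result to cover the reported variation."""
    return gallery_size / templates_per_second * (1.0 + margin)


size = 1_000_000
print(round(estimate_seconds(size, CREATE_GALLERY_TPS), 1))        # 13.9 (s to build)
print(round(estimate_seconds(size, IDENTIFICATION_TPS), 3))        # 0.154 (s per search)
print(round(estimate_seconds(size, IDENTIFICATION_TPS, 0.10), 3))  # 0.169 (s with +10%)
```

Building the gallery is a one-off cost, whereas the identification time is paid on every 1:N search, so the second figure is the one that drives response time.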

3. Passive Liveness Detection

Fraudsters use a constantly growing range of techniques, known as attacks, to impersonate legitimate users. As facial recognition applications are integrated into critical security systems, they must be equipped with Presentation Attack Detection (PAD) and Manipulation Attack Detection (MAD) security measures, because it is vital to determine whether the biometric patterns presented to the application come from a genuine ("bona fide") user or not.

The main objective is to provide facial recognition algorithms with the necessary level of security to prevent the fraudulent use of a user's facial characteristics taken from photographs and/or videos during identification, and thus prevent mass fraud.

Facephi technology ensures that the biometric pattern obtained from the user's face has been extracted from a bona fide biometric presentation, discarding patterns obtained from an attacker presenting paper prints, photographs, videos or masks, or injecting manipulated images into the capture system.

Facephi has implemented a secure, easy-to-use system that grants access in less than a second, faster and easier than remembering a username and password: the right balance between security and ease of access.

Using Facephi's Deep Learning techniques, this new tool determines whether the image comes from a genuine user or from a reproduction, such as a printed photo, a manipulated image, or an image displayed on a mobile device, tablet or PC. It complements the robust facial recognition system provided in Selphi and SelphID. Its main advantage is a much better user experience: the user only has to stay in front of the camera and take a selfie, with no additional collaboration.

FaceLiveness is based on Machine Learning algorithms trained to extract the features contained in a selfie image (e.g. artefacts, warping, texture, depth, light reflectance, resolution inconsistencies, blood pulse, etc.) that let the classification module discriminate between a bona fide (also known as genuine) presentation and an attack (e.g. paper, photo, selfie/video replay, mask, etc.).

A passive attack detection system requests no action from the user and is based entirely on Deep Learning techniques. It therefore introduces no additional friction into the verification and authentication process and is faster than active systems. At the same time, analysing a single selfie (or a few frames extracted from a short video) gives the system a greater capacity to detect a wide range of attacks, whether handcrafted or digitally manipulated.

3.1 Presentation Attack Detection

Facephi's Passive Presentation Attack Detection (PAD) system (FaceLiveness) determines whether the access is being performed by a genuine (bona fide) user or by an attacker presenting a paper print, photo, video or mask that reproduces the biometric characteristics of the person being impersonated.

3.2 Manipulation Attack Detection

Facephi's Manipulation Attack Detection (MAD) system (FaceLiveness) determines whether the access is being performed by a genuine (bona fide) user or by an attacker injecting a modified image into the system.

Facephi's new technology is able to protect against:

- Face swap manipulation: superimposing a person's face onto a photo or video of another person.

- Synthetic face (deepfake): a synthetic face generated using deep learning methods, such as generative adversarial networks (GANs) or diffusion networks.

3.3 Metrics based on ISO/IEC 30107-3

ISO/IEC 30107-1:2023 (Information technology - Biometric presentation attack detection - Part 1: Framework) and ISO/IEC 30107-3:2023 (Part 3: Testing and reporting) set out how to specify, characterize and evaluate presentation attacks (Type 1) that take place at the sensor (whether on a smartphone or a desktop camera) during the collection of the biometric traits (e.g. a selfie or a video). The most important metrics for evaluating the performance of a Presentation Attack Detection (PAD) system are:

APCER - Attack Presentation Classification Error Rate: proportion of attack presentations using the same presentation attack instrument (PAI) species incorrectly classified as bona fide presentations by a presentation attack detection (PAD) subsystem in a specific scenario.

BPCER - Bona Fide Presentation Classification Error Rate: proportion of bona fide presentations incorrectly classified as presentation attacks in a specific scenario.

PAI - Presentation Attack Instrument: biometric characteristic or object used in a biometric presentation attack.

Bona fide presentation: biometric presentation without the goal of interfering with the operation of the biometric system.

Presentation Attack: presentation to the biometric capture subsystem with the goal of interfering with the operation of the biometric system.

| | Samples | APCER |
|---|---|---|
| Paper print | 16,577 | 0.08% |
| Screen replay | 42,724 | 0.57% |
| Mask | 11,117 | 0.89% |
| Face swap manipulation | 12,198 | 35.65% |
| Synthetic face | 38,529 | 7.35% |

Attack Presentation & Manipulation Classification Error Rate (APCER) with paper print, screen replay (photo on screen), mask, face swap and synthetic face test samples. Metrics obtained as an average between internal and public datasets.

| | Samples | BPCER |
|---|---|---|
| Bona fide accesses | 17,607 | 1.64% |

Bona Fide Presentation & Manipulation Classification Error Rate (BPCER) with genuine test samples.
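Following the definitions above, APCER is computed separately for each PAI species and BPCER over the bona fide presentations. A minimal sketch, assuming each test decision is available as a hypothetical (species, accepted-as-bona-fide) record; it is not FacePhi's evaluation tooling.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def apcer_per_species(attacks: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """attacks: (PAI species, True if the attack was classified as bona fide).
    Returns, per species, the proportion of attacks wrongly accepted."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for species, accepted in attacks:
        totals[species] += 1
        if accepted:
            errors[species] += 1
    return {species: errors[species] / n for species, n in totals.items()}


def bpcer(rejected_flags: Iterable[bool]) -> float:
    """rejected_flags: one entry per bona fide presentation, True if it was
    wrongly classified as an attack."""
    flags = list(rejected_flags)
    return sum(flags) / len(flags)


attacks = (
    [("paper print", False)] * 999
    + [("paper print", True)]
    + [("mask", True), ("mask", False)]
)
print(apcer_per_species(attacks))          # {'paper print': 0.001, 'mask': 0.5}
print(bpcer([False, False, True, False]))  # 0.25
```

Keeping APCER per species, rather than pooling all attacks, prevents an easy species with many samples from hiding a weak result on a harder one, as the face swap row in the table illustrates.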

4. Technology usage requirements

| Specifications | Recommended requirements | FPAD minimum requirements | FREC minimum requirements |
|---|---|---|---|
| Resolution | 1080p | - | - |
| IOD (inter-ocular distance) | IOD > 80 | IOD > 60 | IOD > 30 px |
| Compression | Uncompressed format | JPEG low compression (70%) (*) | - |
| Color | Color | Color | Gray scale |
| Pose | < 5° (yaw, pitch, roll) | < 15° (yaw, pitch, roll) | < 45° (yaw, pitch, roll) |
| Face position vs. background | Full Frontal Token Image | > 5% of the face width | > 8% of the face width |

(*) Estimated Values
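The numeric rows of the table can be checked programmatically before a capture is sent for liveness (FPAD) or recognition (FREC). The sketch below covers only IOD, pose and colour; the `Capture` and `Requirement` types, the measured values, and the assumption that IOD is expressed in pixels in every column are hypothetical, and compression and background margin are left out.

```python
from dataclasses import dataclass


@dataclass
class Capture:
    """Hypothetical measurements taken from a face image."""
    iod_px: float   # inter-ocular distance in pixels (assumed unit)
    yaw: float      # head pose angles in degrees
    pitch: float
    roll: float
    is_color: bool


@dataclass(frozen=True)
class Requirement:
    min_iod_px: float    # IOD must be strictly greater than this
    max_pose_deg: float  # every pose angle must be strictly below this
    needs_color: bool


# Minimum values transcribed from the table above.
FPAD_MIN = Requirement(min_iod_px=60, max_pose_deg=15, needs_color=True)
FREC_MIN = Requirement(min_iod_px=30, max_pose_deg=45, needs_color=False)


def meets(capture: Capture, req: Requirement) -> bool:
    """True if the capture satisfies the IOD, pose and colour minimums."""
    worst_angle = max(abs(capture.yaw), abs(capture.pitch), abs(capture.roll))
    return (capture.iod_px > req.min_iod_px
            and worst_angle < req.max_pose_deg
            and (capture.is_color or not req.needs_color))


selfie = Capture(iod_px=70, yaw=10, pitch=-3, roll=2, is_color=True)
grey = Capture(iod_px=45, yaw=20, pitch=0, roll=0, is_color=False)
print(meets(selfie, FPAD_MIN), meets(selfie, FREC_MIN))  # True True
print(meets(grey, FPAD_MIN), meets(grey, FREC_MIN))      # False True
```

The grey-scale capture illustrates why the columns differ: it is still usable for recognition but falls short of the stricter liveness minimums.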

5. References

[1] B. P. J. Phillips, P. J. Rauss, and S. Z. Der, 'FERET (Face Recognition Technology) Recognition Algorithm Development and Test Results', October 1996. Army Research Lab technical report 995.

[2] P. J. Phillips, P. J. Flynn, T. Scruggs, K. W. Bowyer, J. Chang, K. Hoffman, J. Marques, J. Min, and W. Worek, 'Overview of the Face Recognition Grand Challenge', 2005. IEEE Conference on Computer Vision and Pattern Recognition.

[3] ISO/IEC 19795-1 standard: 'Information technology – Biometric performance testing and reporting – Part 1: Principles and framework', 2006

[4] S. A. Rizvi, P. J. Phillips, and H. Moon, 'The FERET Verification Testing Protocol for Face Recognition Algorithms', October 1998. Technical report NISTIR 6281.

[5] P. J. Phillips, H. Moon, S. A. Rizvi, and P. J. Rauss, 'The FERET Evaluation Methodology for Face-Recognition Algorithms', January 1999. Technical report NISTIR 6264.

[6] P. Grother, M. Ngan, 'Face Recognition Vendor Test (FRVT)', May 2014. NIST Interagency Report 8009.

[7] P. J. Phillips, et al., 'Preliminary Face Recognition Grand Challenge Results', 2006. 7th International Conference on Automatic Face and Gesture Recognition.

- ROC I: Target and Query images obtained within the same semester. ROC II: Target and Query images obtained within the same year. ROC III: Target and Query images obtained at least one year apart.

- The marker corresponds to an FMR of 0.000001 (999,999 accesses correctly denied out of 1 million attack attempts). Even in this case, the TMR is around 99% for ROC I.

- Spoofing attacks are usually photographs or videos in which legitimate users appear posing or moving, from which their biometric patterns can be extracted for identification (this also includes the use of artificial fingerprints, contact lenses with retinal patterns or voice recordings).

- ISO/IEC 30107-3:2023 Information technology - Biometric presentation attack detection - Part 3: Testing and reporting.

- Genuine: legitimate user of the system who is present in front of the camera at the time of the biometric measurement.

- Videos or photos taken without the knowledge or awareness of the recorded subject are considered 'non-collaborative'.